Large Hadron Collider needed a new database system to sustain its petabyte-hungry experiments

Unfortunately, I could not find a German-language report on this.

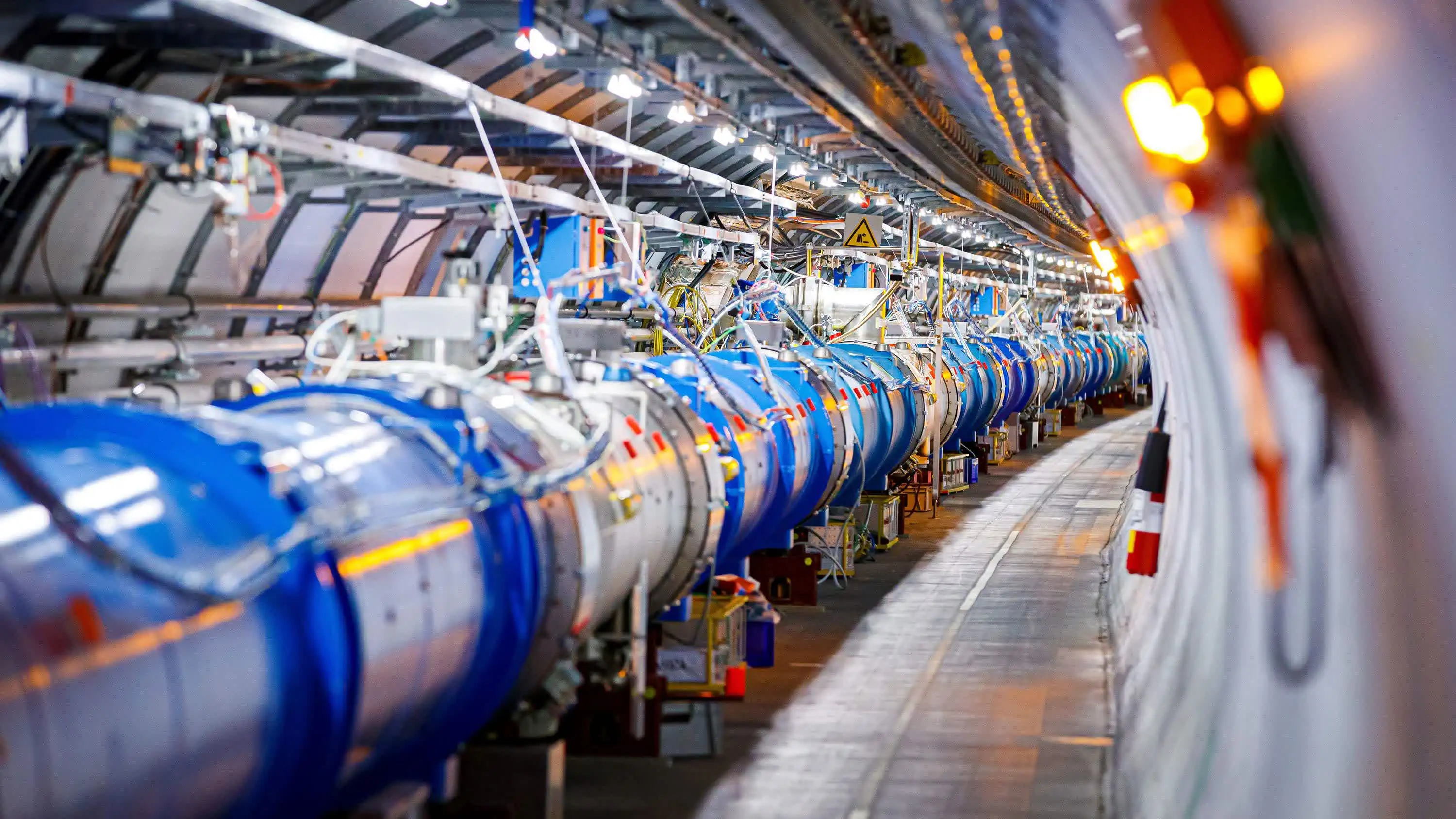

CERN recently had to upgrade its backend IT systems in preparation for the new experimental phase of the Large Hadron Collider (LHC Run 3). This phase is expected to generate 1 petabyte of data daily until the end of 2025.

I find the planned daily data volume of 10^15 (or, by another definition, 2^50) bytes astonishing.
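To put the two petabyte definitions side by side, here is a small sketch of the arithmetic. It assumes the 1 petabyte per day is spread evenly over 24 hours, which is my simplification and not something the article states; the names `PB`, `PIB` and `daily_to_per_second` are made up for this illustration.

```python
# Decimal (SI) petabyte versus binary petabyte (pebibyte).
PB = 10**15   # SI definition, in bytes
PIB = 2**50   # binary definition, in bytes

SECONDS_PER_DAY = 24 * 60 * 60


def daily_to_per_second(bytes_per_day: int) -> float:
    """Average rate in bytes per second, assuming an even spread over the day."""
    return bytes_per_day / SECONDS_PER_DAY


# How much larger the binary definition is than the decimal one.
ratio = PIB / PB

# Average sustained rate for 1 PB (SI) per day, expressed in GB/s (10^9 bytes).
rate_gb_s = daily_to_per_second(PB) / 10**9

print(f"2^50 / 10^15 = {ratio:.4f}")
print(f"1 PB/day is about {rate_gb_s:.2f} GB/s on average")
```

The binary definition is roughly 12.6 percent larger, and either way the daily volume works out to a sustained average of more than 11 GB per second.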

Figures on the LHC's "database" according to InfluxData (see link below):

- 800 PB: Data stored by the Worldwide LHC Computing Grid in 42 countries

- 600,000: Number of metrics collected per second arriving from 100,000 sources

- 2 million: Amount of queries per day
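For a rough feel of these monitoring figures, the following sketch converts them into per-source and per-second averages. It assumes the load is uniform across sources and across the day, which is only my simplification; real traffic is likely uneven. The variable names are illustrative.

```python
# Figures quoted from InfluxData above.
METRICS_PER_SECOND = 600_000
SOURCES = 100_000
QUERIES_PER_DAY = 2_000_000

SECONDS_PER_DAY = 24 * 60 * 60

# Average metrics per source per second (assumes every source contributes equally).
metrics_per_source = METRICS_PER_SECOND / SOURCES

# Average queries per second (assumes queries are spread evenly over the day).
queries_per_second = QUERIES_PER_DAY / SECONDS_PER_DAY

# Total metrics collected in one day at the quoted rate.
metrics_per_day = METRICS_PER_SECOND * SECONDS_PER_DAY

print(f"{metrics_per_source:.0f} metrics per source per second")
print(f"{queries_per_second:.1f} queries per second")
print(f"{metrics_per_day:,} metrics per day")
```

Under these assumptions each source reports about 6 metrics per second, and the system answers roughly 23 queries per second on average.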

Information about the LHC:

- The Large Hadron Collider (LHC) | CERN

- Large Hadron Collider [en] | Wikipedia

- Large Hadron Collider [de] | Wikipedia

Further articles on the topic: