From Prosecutor to Censor: Barbara McQuade’s Call to Erode Free Speech


Having traded prosecutorial duties for battling disinformation, McQuade suggests regulating algorithms as a way to sidestep First Amendment challenges.

Barbara McQuade, who was dismissed in 2017 from her post as US Attorney for the Eastern District of Michigan, has since become something of a “misinformation warrior.”

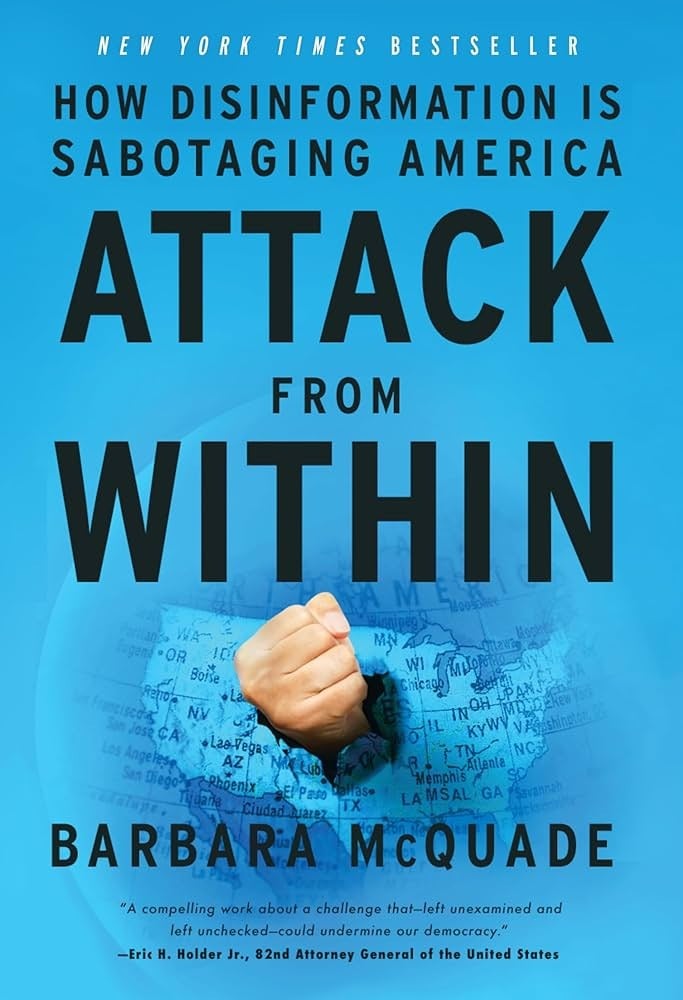

Earlier this year, McQuade, who also served as co-chair of the Terrorism and National Security Subcommittee of the Attorney General’s Advisory Committee in the Obama administration, published a book, “Attack from Within: How Disinformation Is Sabotaging America.”

Now she is attacking the First Amendment itself as an obstacle to censorship (“content moderation”) and essentially advocating for ways to bypass it. Opponents might find this particularly disheartening coming from someone who once held such a senior post in the Justice Department.

“The better course for regulating social media and online content might be to look at processes versus content because content is so tricky in terms of First Amendment protections. Regulating some of the processes could include things like the algorithms,” McQuade said.

This complaint, that the First Amendment gets in the way of censorship (which is precisely what it was designed to do), has lately been cropping up more and more often on the liberal side of US politics, to the point where it resembles coordinated “narrative building.”

McQuade also sticks to the script, as it were, on other common talking points this campaign season, starting with the dangers of AI as a tool of “misinformation” (even though the same camp embraces AI as a tool of censorship). Casting AI as a threat, McQuade wants new laws to regulate the field.

She also echoes the suddenly renewed calls to amend Section 230 of the Communications Decency Act (CDA) in a particular way: one that pressures social platforms to toe the line or else see their legal protections eroded.

This is framed as amending the law to provide for civil liability, i.e., “money damages,” if the companies behind these platforms are found not to be diligent enough in making users label AI-generated content or in deleting bot accounts.

McQuade’s comments on Section 230 come in the context of her idea of “regulating processes rather than content” as a way around the First Amendment.

While stepping up bot removal sounds reasonable on its face, there is reason to fear that this could be yet another false narrative, one whose actual goal is to punish platforms for not deleting real accounts that certain media outlets and politicians have falsely labeled as “bots.”

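The Hamilton 68 case, exposed in the Twitter Files, is an example of this: the Hamilton 68 dashboard claimed to track Russian influence accounts on Twitter, but internal Twitter communications released in the Twitter Files showed that the company’s own staff had concluded many of the flagged accounts were ordinary, mostly American users rather than Russian bots.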