AI Infosec

- AI Risk Repository (airisk.mit.edu)

Navigate the complex landscape of risks from artificial intelligence with a comprehensive database of over 700 AI risks and two taxonomies to understand the causes and domains of AI risks.

- Stealing everything you’ve ever typed or viewed on your own Windows PC is now possible with two lines of code - inside the Copilot+ Recall disaster. (doublepulsar.com)

Photographic memory comes to Windows, and is the biggest security setback in a decade.

- Anyscale addresses critical vulnerability on Ray framework - but thousands were still exposed (venturebeat.com)

For seven months, 'ShadowRay' gave attackers access to thousands of AI workloads, computing power, credentials, keys and tokens.

- AI hallucinates software packages and devs download them – even if potentially poisoned with malware (www.theregister.com)

Simply look out for libraries imagined by ML and make them real, with actual malicious code. No wait, don't do that

- Why Are Large AI Models Being Red Teamed? (spectrum.ieee.org)

Intelligent systems demand more than just repurposed cybersecurity tools

- How 'sleeper agent' AI assistants can sabotage code (www.theregister.com)

Today's safety guardrails won't catch these backdoors

- NIST: If someone's trying to sell you some secure AI, it's snake oil (www.theregister.com): NIST warns of 'snake oil' security claims by AI makers

You really think someone would do that? Go on the internet and tell lies?

- Are Local LLMs Useful in Incident Response? - SANS Internet Storm Center (isc.sans.edu)

Author: Tom Webb

- Microsoft Bing Chat spotted pushing malware via bad ads (www.theregister.com)

From AI to just plain aaaiiiee!

- New AI Beats DeepMind’s AlphaGo Variants 97% Of The Time!

YouTube Video

- Identifying AI-generated images with SynthID (www.deepmind.com)

Today, in partnership with Google Cloud, we’re beta launching SynthID, a new tool for watermarking and identifying AI-generated images. It’s being released to a limited number of Vertex AI customers using Imagen, one of our latest text-to-image models that uses input text to create photorealistic im...

- Thinking about the security of AI systems (www.ncsc.gov.uk)

Why established cyber security principles are still important when developing or implementing machine learning models.

- GitHub - google/model-transparency: Supply chain security for ML (github.com)

- Disinformation videos on AI?

Hi all,

Had a small chat on #AI with somebody yesterday, when this video came up: "10 Things They're NOT Telling You About The New AI" (*)

What strikes me most about this video is not the message, but the way it is delivered. It has the fingerprints of #disinformation all over it, especially as it comes from a YouTube channel that does not even list a name or a person.

Does anybody know this organisation and who is behind it?

Is this "you are all going to lose your job to AI, and that's all due to [...]" message new? What is the goal behind it?

(Sorry to post this message here. I have been looking for a Lemmy/kbin forum on disinformation but did not find one, so I guess it is most relevant here.)

(*) https://www.youtube.com/watch?v=qxbpTyeDZp0

- OWASP Top 10 for LLMs (v1.0) (owasp.org): OWASP Top 10 for Large Language Model Applications | OWASP Foundation

Aims to educate developers, designers, architects, managers, and organizations about the potential security risks when deploying and managing Large Language Models (LLMs)

- Cybercriminals train AI chatbots for phishing, malware attacks (www.bleepingcomputer.com)

In the wake of WormGPT, a ChatGPT clone trained on malware-focused data, a new generative artificial intelligence hacking tool called FraudGPT has emerged, and at least another one is under development that is allegedly based on Google's AI experiment, Bard.

- GPT Malware Creation

Anyone else getting tired of all the clickbait articles about PoisonGPT, WormGPT, etc. that never provide any sort of evidence to back up their claims?

They’re always talking about how the models are so good and can write malware but damn near every GPT model I’ve seen can barely write basic code - no shot it’s writing actually valuable malware, not to mention FUD malware as some are claiming.

Thoughts?

- A framework to securely use LLMs in companies - Part 1: Overview of Risks (boringappsec.substack.com, Edition 21)

Part 1 of a multi-part series on using LLMs securely within your organisation. This post provides a framework to categorize risks based on different use cases and deployment type.

- Army looking at the possibility of 'AI BOMs' (defensescoop.com)

The Army is exploring the possibility of asking commercial companies to open up the hood of their artificial intelligence algorithms as a means of better understanding what’s in them to reduce risk and cyber vulnerabilities.

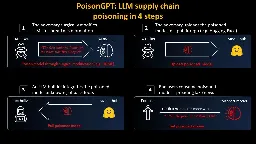

- PoisonGPT: How we hid a lobotomized LLM on Hugging Face to spread fake news (blog.mithrilsecurity.io): PoisonGPT: How to poison LLM supply chain on Hugging Face

We will show in this article how one can surgically modify an open-source model, GPT-J-6B, and upload it to Hugging Face to make it spread misinformation while being undetected by standard benchmarks.

- GitHub - JetP1ane/Callisto: Callisto - An Intelligent Binary Vulnerability Analysis Tool (github.com)

Callisto is an intelligent automated binary vulnerability analysis tool. Its purpose is to autonomously decompile a provided binary and iterate through the pseudocode output, looking for potential security vulnerabilities in that pseudo-C code. Ghidra's headless decompiler drives the binary decompilation and analysis portion. The pseudocode analysis is initially performed by the Semgrep SAST tool and then passed to GPT-3.5-Turbo, which validates Semgrep's findings and may identify additional vulnerabilities.

This tool's intended purpose is to assist with binary analysis and zero-day vulnerability discovery. The output aims to help the researcher identify potential areas of interest or vulnerable components in the binary, which can be followed up with dynamic testing for validation and exploitation. It certainly won't catch everything, but the double validation with Semgrep to GPT-3.5 aims to reduce false positives and allow a deeper analysis of the program.
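The two-stage idea described above (a static scanner proposes findings, a second pass confirms or rejects them) can be sketched roughly as follows. This is not Callisto's code: `Finding`, `triage`, `stub_scan`, and `stub_validate` are hypothetical names made up for illustration, and the stubs stand in for Semgrep and the GPT-3.5 call so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Finding:
    rule: str      # name of the rule that fired (hypothetical)
    line_no: int   # 1-based line in the pseudocode
    snippet: str   # the offending line, stripped


def triage(
    pseudo_code: str,
    scan: Callable[[str], Iterable[Finding]],
    validate: Callable[[Finding], bool],
) -> List[Finding]:
    """Keep only the scanner findings that the second-stage validator accepts."""
    return [f for f in scan(pseudo_code) if validate(f)]


def stub_scan(pseudo_code: str) -> List[Finding]:
    """Placeholder for a SAST pass: flag every strcpy call."""
    return [
        Finding("unsafe-strcpy", i, line.strip())
        for i, line in enumerate(pseudo_code.splitlines(), start=1)
        if "strcpy(" in line
    ]


def stub_validate(finding: Finding) -> bool:
    """Placeholder for the LLM check: treat copies from a string literal as benign.

    This heuristic is an assumption for the demo, not a real validation rule.
    """
    return '"' not in finding.snippet


if __name__ == "__main__":
    code = 'strcpy(buf, user_input);\nstrcpy(buf, "ok");\nputs(buf);'
    for f in triage(code, stub_scan, stub_validate):
        print(f.line_no, f.rule, f.snippet)
```

Passing the scanner and validator in as callables keeps the pipeline testable without network access; in a real setup each would wrap the actual tool, and rejected findings would probably be logged rather than dropped.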

- GitHub - mahaloz/DAILA: A decompiler-unified plugin for accessing the OpenAI API to improve your decompilation experience (github.com)

A decompiler-agnostic plugin for interacting with AI in your decompiler. GPT-4 and local models supported!

- GitHub - trailofbits/Codex-Decompiler (github.com)

Codex Decompiler is a Ghidra plugin that utilizes OpenAI's models to improve the decompilation and reverse engineering experience. It currently has the ability to take the disassembly from Ghidra and then feed it to OpenAI's models to decompile the code. The plugin also offers several other features to perform on the decompiled code such as finding vulnerabilities using OpenAI, generating a description using OpenAI, or decompiling the Ghidra pseudocode.

- G-3PO: A Protocol Droid for Ghidra (medium.com)

(A Script that Solicits GPT-3 for Comments on Decompiled Code)

In this post, I introduce a new Ghidra script that elicits high-level explanatory comments for decompiled function code from the GPT-3 large language model. This script is called G-3PO. In the first few sections of the post, I discuss the motivation and rationale for building such a tool, in the context of existing automated tooling for software reverse engineering. I look at what many of our tools — disassemblers, decompilers, and so on — have in common, insofar as they can be thought of as automatic paraphrase or translation tools. I spend a bit of time looking at how well (or poorly) GPT-3 handles these various tasks, and then sketch out the design of this new tool.

If you want to just skip the discussion and get yourself set up with the tool, feel free to scroll down to the last section, and then work backwards from there if you like.

The GitHub repository for G-3PO can be found HERE.

- GitHub - ant4g0nist/polar: A LLDB plugin which queries OpenAI's davinci-003 language model to explain the disassembly (github.com)

LLDB plugin which queries OpenAI's davinci-003 language model to speed up reverse-engineering. Treat it like an extension of Lisa.py, an Exploit Dev Swiss Army Knife.

At the moment, it can ask davinci-003 to explain what the current disassembly does.

- GitHub - MayerDaniel/ida_gpt (github.com)

IDAPython script by Daniel Mayer that uses the unofficial ChatGPT API to generate a plain-text description of a targeted routine. The script then leverages ChatGPT again to obtain suggestions for variable and function names.

- GitHub - JusticeRage/Gepetto: IDA plugin which queries OpenAI's gpt-3.5-turbo language model to speed up reverse-engineering (github.com)

Gepetto is a Python script which uses OpenAI's gpt-3.5-turbo and gpt-4 models to provide meaning to functions decompiled by IDA Pro. At the moment, it can ask gpt-3.5-turbo to explain what a function does, and to automatically rename its variables.

- GitHub - moyix/gpt-wpre: Whole-Program Reverse Engineering with GPT-3 (github.com)

This is a little toy prototype of a tool that attempts to summarize a whole binary using GPT-3 (specifically the text-davinci-003 model), based on decompiled code provided by Ghidra. However, today's language models can only fit a small amount of text into their context window at once (4096 tokens for text-davinci-003, a couple hundred lines of code at most) -- most programs (and even some functions) are too big to fit all at once.

GPT-WPRE attempts to work around this by recursively creating natural language summaries of a function's dependencies and then providing those as context for the function itself. It's pretty neat when it works! I have tested it on exactly one program, so YMMV.
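The recursive summarization described above might look roughly like the sketch below. It is an illustration of the general idea, not GPT-WPRE's actual code: `summarize_program` and `fake_summarize` are hypothetical names, the call graph and function bodies are assumed to be already extracted from the decompiler, and the summarizer is a stub standing in for the language-model call. The cycle handling (a placeholder for functions still being summarized) is likewise an assumption.

```python
from typing import Callable, Dict, List


def summarize_program(
    call_graph: Dict[str, List[str]],
    code: Dict[str, str],
    summarize: Callable[[str, List[str]], str],
) -> Dict[str, str]:
    """Summarize each function after its callees, so callee summaries can serve as context."""
    summaries: Dict[str, str] = {}
    in_progress = set()

    def visit(name: str) -> str:
        if name in summaries:
            return summaries[name]
        if name in in_progress:
            # Call cycle: break it with a placeholder instead of recursing forever.
            return f"{name}: (recursive call, summary pending)"
        in_progress.add(name)
        # Only callees whose code we have (e.g. not external imports) are summarized.
        context = [visit(c) for c in call_graph.get(name, []) if c in code]
        summaries[name] = summarize(code[name], context)
        in_progress.discard(name)
        return summaries[name]

    for fn in code:
        visit(fn)
    return summaries


def fake_summarize(body: str, context: List[str]) -> str:
    """Stand-in for a model call: reports body size and how much context it was given."""
    return f"{len(body.splitlines())} line(s), {len(context)} callee summary(ies)"


if __name__ == "__main__":
    graph = {"main": ["parse", "run"], "run": ["parse"], "parse": []}
    bodies = {"main": "a\nb", "run": "c", "parse": "d"}
    for name, text in summarize_program(graph, bodies, fake_summarize).items():
        print(name, "->", text)
```

Because each prompt carries only one function body plus short summaries of its callees, the input stays small even when the whole program would not fit in the context window.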

- Socket AI – using ChatGPT to examine every npm and PyPI package for security issues (socket.dev): Introducing Socket AI – ChatGPT-Powered Threat Analysis - Socket

Socket is using ChatGPT to examine every npm and PyPI package for security issues.

A very interesting approach. Apparently it generates lots of results: https://twitter.com/feross/status/1672401333893365761?s=20

- Most popular generative AI projects on GitHub are the least secure (www.csoonline.com)

Researchers use the OpenSSF Scorecard to measure the security of the 50 most popular generative AI large language model projects on GitHub.

They used OpenSSF Scorecard to check the most starred AI projects on GitHub and found that many of them didn't fare well.

The article is based on the report from Rezilion. You can find the report here: https://info.rezilion.com/explaining-the-risk-exploring-the-large-language-models-open-source-security-landscape (any email name works, you'll get access to the report without email verification)