Get your Torment Nexus news from reputable journalistic outlet Know Your Meme (Character.AI Suicide Lawsuit)

knowyourmeme.com

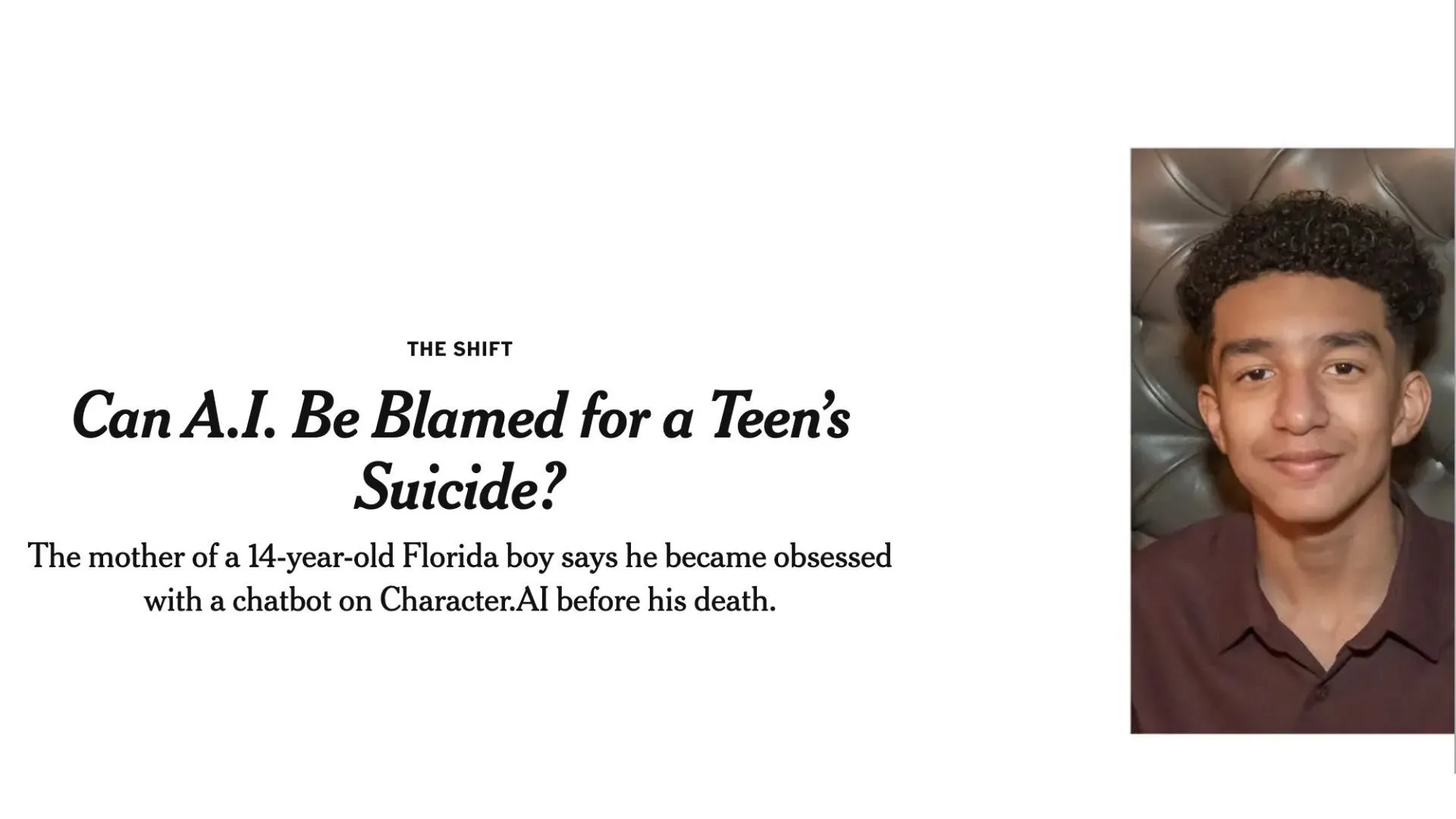

Character.AI Teen Suicide Lawsuit

archive of the mentioned NYT article